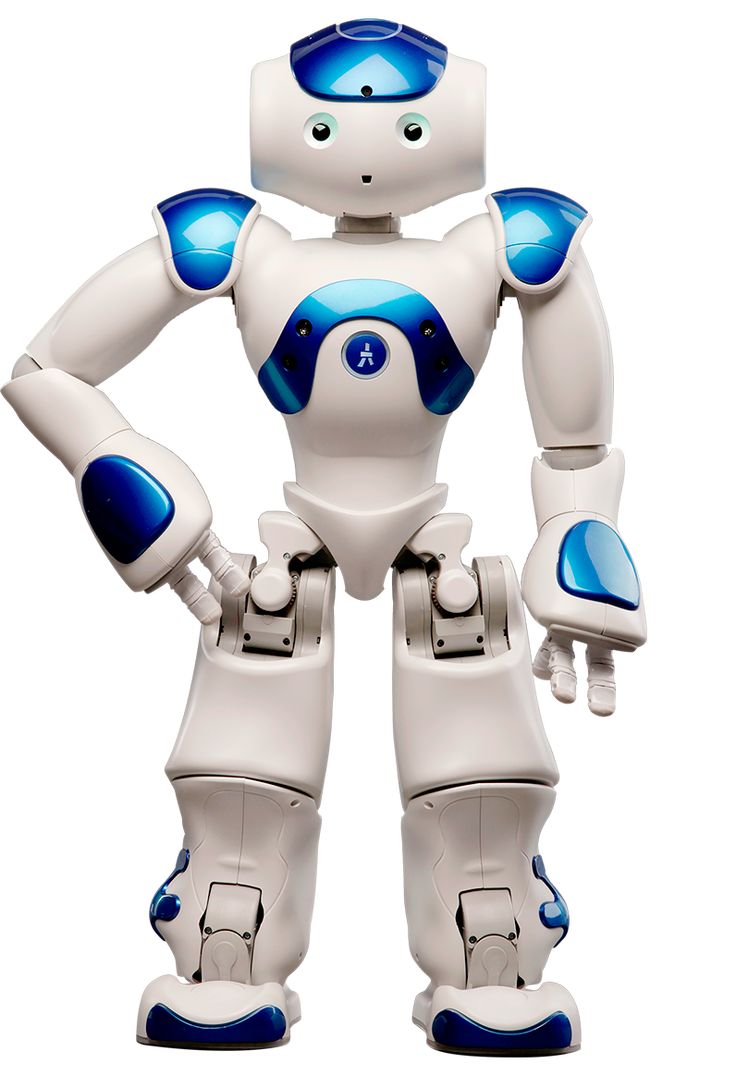

**Google DeepMind said its latest Gemini Robotics models can work across multiple robot embodiments.** | Source: Google DeepMind

Google DeepMind has introduced a new version of its multimodal Gemini 1.5 model, which, according to the developers, is capable of controlling robots almost like a human. The key word here is "almost". The system supposedly understands complex commands in natural language and can independently plan the robot's actions. So now you can just say "clean up the kitchen," and the robot will... think for a couple of seconds and ask what exactly you meant.

The developers are especially proud of the 1-million-token context window, which lets the model process huge amounts of information. In practice, this means that the robot will be able to "remember" the entire history of your interactions with it. It's scary to imagine what will happen when it starts recalling how you yelled at it last month for spilling coffee.

Engineers are particularly enthusiastic about the model's "agentic" capabilities: the ability of the AI to independently break down complex tasks into simple actions. For example, given the command "make breakfast", the system should work out for itself that it needs to find the refrigerator, take out the eggs, turn on the stove... Although, to be honest, most people already manage this task quite well without the help of artificial intelligence.

Interestingly, the model was trained not only on text and images, but also on data from robotic arms. It turns out that Gemini 1.5 knows about the world not only from books and the Internet, but also "by touch", although so far only through the sensors of industrial robots. It's unlikely that this will help it understand why a cutlet should be juicy rather than perfectly round.

The developers are modestly silent about how much energy such a system consumes and how often it makes mistakes in real conditions. One would like to believe that a robot running Gemini 1.5 will at least not confuse sugar with salt, although, given the track record of previous AI systems, this is an optimistic scenario.

As noted on jobtorob.com, perhaps soon we will have to master the profession of "wish explainer": a person who knows how to formulate commands correctly for a capricious AI. However, given the pace of technological development, it is possible that in a couple of years this profession will become unnecessary.

The funniest thing about this story is the seriousness with which the developers talk about the system's capabilities. It seems they have come to believe that their creation really understands the world the way a person does, rather than just skillfully imitating that understanding.

However, progress cannot be denied: if robots used to be blind executors of preprogrammed commands, now they can at least try to understand what is wanted of them. The main thing is not to trust them with anything serious yet. Especially breakfast.